AI and Data Privacy in Real Estate CRMs

AI is transforming real estate CRMs, boosting efficiency but raising critical data privacy concerns. Here's what you need to know:

- Adoption Surge: AI-powered CRMs now achieve up to 90% adoption rates, compared to under 30% for traditional systems.

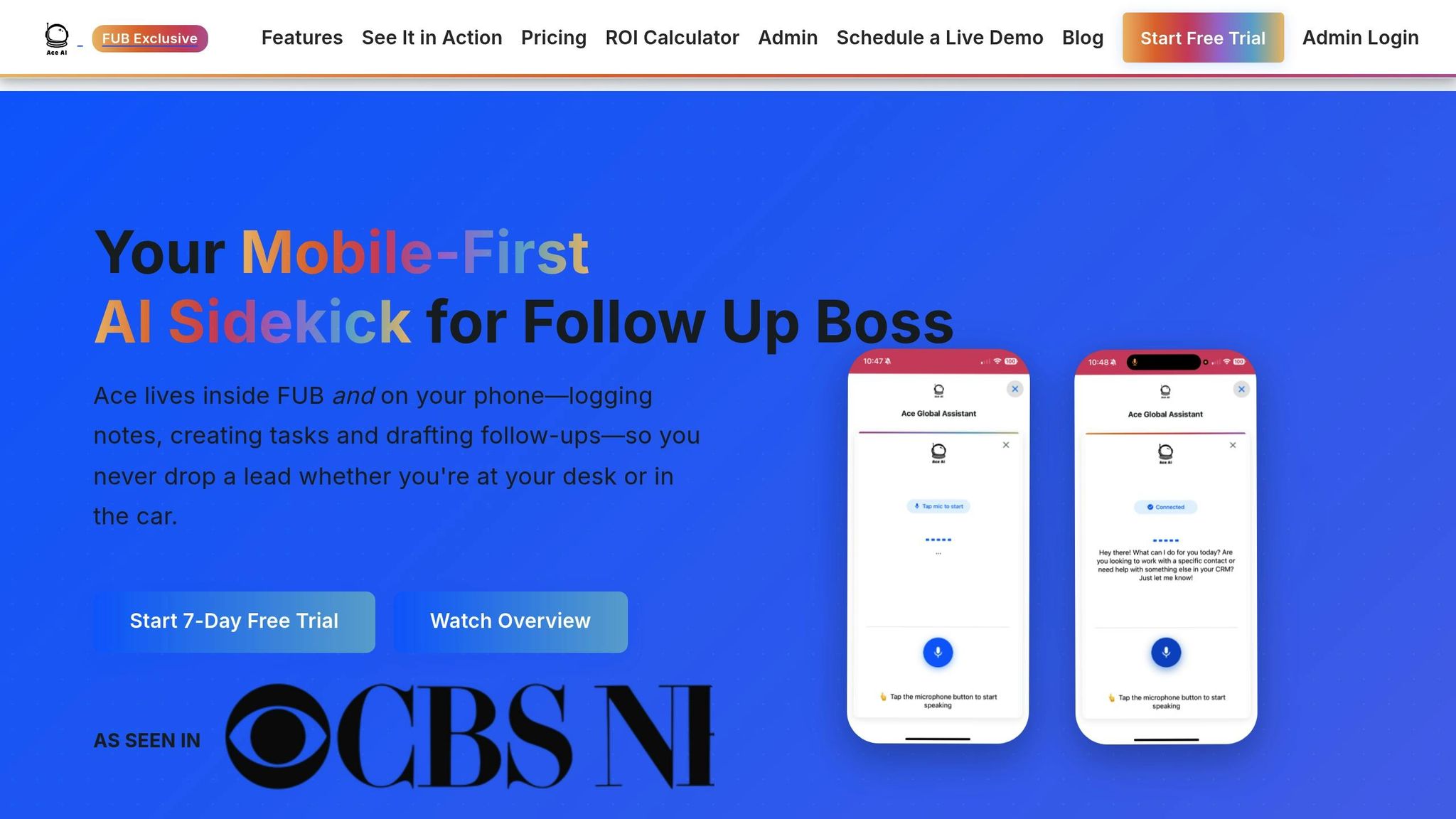

- Efficiency Gains: Tools like Ace AI improve lead generation, engagement (+60%), and conversions (+10%) by automating tasks like predictive lead scoring and personalized outreach.

- Privacy Risks: AI systems collect sensitive data (financial details, personal identifiers) and face risks like cyberattacks, algorithmic bias, and accidental data leaks.

- Compliance Challenges: Navigating regulations like GDPR, CCPA, and the Fair Housing Act is essential. Non-compliance can lead to hefty fines and legal costs, as seen in a $2.3M settlement in 2024.

- Solutions: Platforms like Ace AI address risks with built-in compliance tools, data encryption, anonymization, and secure storage.

Key Takeaway: Real estate teams must balance AI's benefits with robust data privacy measures to protect sensitive information, comply with regulations, and maintain client trust.

Data Privacy Risks in AI-Powered CRMs

AI-powered CRMs are a game-changer for efficiency, but they also create serious privacy risks for both agents and clients. Recognizing these risks is the first step toward protecting sensitive data. Let’s look at the specific challenges tied to data collection, storage, and regulatory compliance.

Data Collection and Storage Risks

Real estate CRMs powered by AI gather a wide range of sensitive information - financial details, personal identifiers, and even behavioral data. This makes them attractive targets for cyberattacks, exposing agents and clients to significant risks.

Here’s a reality check: 71% of consumers are protective of their personal data, and 65% believe companies don’t handle it responsibly. Their concerns aren’t unfounded. The real estate industry has seen its fair share of breaches, highlighting just how vulnerable this data can be.

But it’s not just about breaches. AI systems often go further, analyzing browsing histories, social media activity, and search terms to micro-target leads. While this might seem like smart marketing, it raises serious concerns about surveillance. Some platforms even analyze images, videos, and market data to estimate home values, occasionally making invasive assumptions about lifestyle based on demographics.

Rob Stevenson, Founder of BackupVault, underscores the importance of prioritizing privacy:

"Yes, AI can certainly pose a privacy risk if it isn't properly regulated or designed with data protection in mind. One big concern is that AI often requires large amounts of data, which can sometimes include personal or sensitive information. If this data isn't anonymized or securely handled, it opens the door to misuse, whether intentional or through hacking."

The technical side of things doesn’t make it any easier. 55% of experts cite accidental leaks of sensitive data by large language models (LLMs) as a major concern, and over half worry that user prompts could unintentionally expose sensitive information. This is particularly relevant for AI-driven chatbots and automated communication tools in real estate CRMs.
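One way to reduce the prompt-exposure risk described above is to scrub obvious identifiers before any text leaves the CRM for a language model. The sketch below is a minimal illustration, not how any particular platform does it: the `redact_prompt` function and the three regex patterns are hypothetical, they only cover US-style formats, and they will miss names, addresses, and many other identifiers.

```python
import re

# Hypothetical patterns for illustration only; real deployments need
# broader, well-tested coverage (names, addresses, account numbers, ...).
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "PHONE": re.compile(
        r"(?<!\d)(?:\+?1[\s.-]?)?\(?\d{3}\)?[\s.-]?\d{3}[\s.-]?\d{4}(?!\d)"
    ),
}


def redact_prompt(text: str) -> str:
    """Replace each matched PII span with a bracketed placeholder."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


prompt = "Follow up with Dana at dana@example.com or 555-867-5309; SSN 123-45-6789."
print(redact_prompt(prompt))
# prints: Follow up with Dana at [EMAIL] or [PHONE]; SSN [SSN].
```

Pattern-based redaction is a coarse first filter. It works best alongside the policies and training mentioned in this section rather than as a replacement for them.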

Data storage adds another layer of risk. 80% of data experts agree that AI complicates data security, and 52% highlight AI attacks by cybercriminals as a growing threat. A chilling example is the 2018 Strava incident, where the fitness app’s "heatmap" feature accidentally revealed sensitive user locations, including military bases.

Frank Milia from ITAMG explains why these risks persist:

"It's important to have policies, tools, and training in place that impede users from inappropriately sharing protected data or intellectual property with AI tools... In other words, once a user overshares sensitive information, there is a significant risk of that data being distributed across multiple systems and being used to train the AI model. Even if one could remove the sensitive input, the challenge of confirming data sanitization of the neural network remains."

As these risks mount, staying compliant with privacy regulations becomes an even bigger challenge.

Meeting Privacy Regulation Requirements

Real estate professionals must navigate a maze of privacy laws like GDPR, CCPA, and fair housing regulations. Falling short can lead to hefty fines and legal troubles that could cripple a business.

The regulatory focus on AI is tightening. In May 2024, the U.S. Department of Housing and Urban Development (HUD) issued guidance on how the Fair Housing Act (FHA) applies to AI, focusing on tenant screening and targeted housing advertising. The guidance makes it clear that both intentional discrimination and practices with unjustified discriminatory effects are prohibited. That has direct implications for AI-driven tenant screening and ad targeting, and by extension for lead scoring and property valuations.

The risks of non-compliance are real. In November 2024, a court approved a roughly $2.3 million settlement in a class action alleging that SafeRent Solutions' AI-driven tenant screening system discriminated against low-income applicants, particularly Black and Hispanic individuals using housing vouchers. SafeRent did not admit wrongdoing, but the case illustrates how claims of algorithmic bias can turn into costly fair housing litigation.

One major hurdle is the opaque nature of AI decision-making. Large language models often operate like black boxes, making it hard to explain how decisions - like lead scoring or tenant approvals - are reached. This lack of transparency erodes trust and complicates compliance with regulations that demand clarity in automated decision-making.

To meet these standards, real estate teams must:

- Clearly inform clients when they’re interacting with AI instead of a human.

- Obtain explicit consent for collecting and using personal data.

- Implement systems to track consent, manage data retention, and explain how client data is being used.
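The three requirements above can be sketched as a small consent ledger. This is a hedged illustration only: the `ConsentRecord` fields, the two-year `RETENTION` window, and the rule that purposes prefixed with `ai_` also need a recorded AI disclosure are all assumptions made for the example, not legal guidance or any vendor's data model.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional

# Hypothetical retention window; the right value depends on jurisdiction and policy.
RETENTION = timedelta(days=730)


@dataclass
class ConsentRecord:
    client_id: str
    purpose: str                      # e.g. "email_marketing", "ai_lead_scoring"
    granted_on: date
    revoked_on: Optional[date] = None
    ai_disclosed: bool = False        # was the client told an AI is involved?

    def is_active(self, today: date) -> bool:
        """Consent counts only if not revoked and still inside the retention window."""
        if self.revoked_on is not None and self.revoked_on <= today:
            return False
        return timedelta(0) <= today - self.granted_on <= RETENTION


def may_process(records, client_id: str, purpose: str, today: date) -> bool:
    """Allow processing only with active, purpose-specific consent on file."""
    for r in records:
        if r.client_id != client_id or r.purpose != purpose:
            continue
        if not r.is_active(today):
            continue
        # Hypothetical convention: "ai_" purposes also require a recorded AI disclosure.
        if purpose.startswith("ai_") and not r.ai_disclosed:
            continue
        return True
    return False


records = [
    ConsentRecord("c-101", "ai_lead_scoring", date(2024, 1, 15), ai_disclosed=True),
    ConsentRecord("c-102", "email_marketing", date(2023, 3, 1), revoked_on=date(2024, 2, 1)),
]
print(may_process(records, "c-101", "ai_lead_scoring", date(2024, 6, 1)))  # True
print(may_process(records, "c-102", "email_marketing", date(2024, 6, 1)))  # False (revoked)
```

Keeping the purpose on each record means consent for one use, such as email marketing, is never silently treated as consent for another, such as AI lead scoring.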

Smaller teams face an uphill battle here. Without dedicated compliance resources, they must still establish strong data governance frameworks, conduct regular audits, and document AI decision-making processes - all while keeping up with ever-changing regulations.

How Ace AI Protects Data Privacy in Follow Up Boss CRM

Ace AI takes a proactive approach to safeguarding data privacy, integrating protective measures into the secure architecture of Follow Up Boss. These features directly address potential data risks, offering real estate professionals a secure and reliable framework for managing sensitive information.

Built-In Compliance Tools

Ace AI simplifies the often complex task of staying compliant with regulations like GDPR and CCPA. By automating key processes, it reduces compliance-related challenges for real estate teams by 73%. The system handles consent management, tracks data usage, and maintains detailed audit trails, ensuring that regulatory requirements are consistently met. Additionally, it documents data flows clearly, which not only enhances transparency but also builds trust with clients and satisfies regulatory demands.

Data Security and Encryption Methods

Built on Follow Up Boss's secure infrastructure, Ace AI ensures the protection of sensitive client data through advanced security measures. The platform uses 256-bit encryption for data both at rest and in transit, along with continuous system monitoring. It also meets industry standards, holding certifications such as SOC 2 Type 2, SOC 3, and CASA Tier 2. To further reduce risks, Ace AI anonymizes personally identifiable information (PII) used for AI training. Other security features include two-factor authentication, account verification, and tools to detect unusual login activity. These measures integrate seamlessly into agents' workflows, offering robust security without unnecessary complexity.

Data Anonymization and Storage Limits

Ace AI employs techniques like masking, pseudonymization, and data aggregation to minimize the risk of re-identification. These methods allow data to remain useful for analysis and testing while reducing the likelihood of breaches and lowering storage costs. Because properly anonymized data can often be reused without fresh consent, less identifiable data needs to be held in secure storage; pseudonymized data, by contrast, is still treated as personal data under GDPR and needs the same protection. While anonymization can sometimes affect the utility of data, Ace AI uses additional methods such as data generalization and randomization to retain analytical value. These processes run automatically in the background, letting teams focus on their clients while privacy remains consistently protected.
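To make the three techniques named above concrete, here is a minimal sketch of masking, pseudonymization, and aggregation using only the Python standard library. It is a generic illustration and does not describe Ace AI's implementation: the function names, the `SECRET_KEY` placeholder, the record layout, and the minimum group size of 3 are all assumptions.

```python
import hashlib
import hmac
from collections import Counter

# Hypothetical placeholder; a real key belongs in a secrets manager, not in code.
SECRET_KEY = b"replace-with-a-managed-secret"


def mask_email(email: str) -> str:
    """Masking: keep the first character and the domain, hide the rest."""
    local, _, domain = email.partition("@")
    return f"{local[:1]}{'*' * max(len(local) - 1, 0)}@{domain}"


def pseudonymize(client_id: str) -> str:
    """Pseudonymization: a keyed hash yields a stable token that is hard to reverse without the key."""
    digest = hmac.new(SECRET_KEY, client_id.encode(), hashlib.sha256).hexdigest()
    return digest[:16]


def aggregate_by_zip(records, min_group_size: int = 3):
    """Aggregation: count leads per ZIP code and suppress groups too small to hide an individual."""
    counts = Counter(r["zip"] for r in records)
    return {z: n for z, n in counts.items() if n >= min_group_size}


leads = [{"zip": "78701"}] * 3 + [{"zip": "78702"}]
print(mask_email("dana@example.com"))   # d***@example.com
print(aggregate_by_zip(leads))          # {'78701': 3}
```

The same client ID always maps to the same pseudonym, so records can still be joined for analysis. Anyone holding the key can re-link tokens to clients, though, so pseudonymized data should be protected like the original.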

Best Practices for Real Estate Teams Using AI CRMs

To successfully integrate AI-powered CRMs into your real estate operations, it’s essential to balance automation with data privacy. A structured approach ensures your workflow remains secure and efficient while leveraging AI’s potential.

Setting Up Role-Based Data Access

Role-based access control (RBAC) helps limit data access to only what’s necessary for each role, reducing the chances of data misuse or accidental breaches.

Start by mapping out your data flows and assigning permissions based on job responsibilities. For example, Managers might have full editing capabilities, while Sales Representatives are restricted to viewing certain data. The guiding principle here is the “least privilege” approach - users should only have access to the information they need to perform their tasks.

To further strengthen security, implement multifactor authentication, restrict access by IP address when possible, and schedule periodic reviews to update permissions as roles evolve.
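The least-privilege idea above can be sketched as a deny-by-default permission check. The role names, the `Permission` values, and the `ROLE_PERMISSIONS` map below are hypothetical examples rather than settings from any specific CRM. In practice you would configure the equivalent in your CRM's own permission system.

```python
from enum import Enum, auto


class Permission(Enum):
    VIEW_CONTACT = auto()
    EDIT_CONTACT = auto()
    VIEW_FINANCIALS = auto()
    EXPORT_DATA = auto()


# Hypothetical role map: each role lists only what it needs.
ROLE_PERMISSIONS = {
    "manager": {
        Permission.VIEW_CONTACT,
        Permission.EDIT_CONTACT,
        Permission.VIEW_FINANCIALS,
        Permission.EXPORT_DATA,
    },
    "sales_rep": {Permission.VIEW_CONTACT},
}


def is_allowed(role: str, permission: Permission) -> bool:
    """Deny by default: unknown roles and unlisted permissions get no access."""
    return permission in ROLE_PERMISSIONS.get(role, set())


print(is_allowed("manager", Permission.EDIT_CONTACT))    # True
print(is_allowed("sales_rep", Permission.EDIT_CONTACT))  # False
print(is_allowed("intern", Permission.VIEW_CONTACT))     # False (role not defined)
```

Because access is granted only by explicit listing, a newly created role starts with no permissions until someone deliberately assigns them, which keeps periodic permission reviews simple.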

Conducting Regular Privacy Audits

Privacy audits are indispensable for identifying compliance gaps and vulnerabilities in your data handling processes.

Internal audits should focus on how your team manages data, track the flow of information, and consider the legal jurisdictions in which your business operates. These reviews are an opportunity to ensure that AI tools align with your company's privacy policies, especially when handling sensitive client information.

External audits, conducted by third-party experts, provide an unbiased perspective on your privacy practices. These assessments often uncover risks that internal teams might miss. Plan for regular audits and use compliance tools to quickly address any issues that arise.

Training Teams on Privacy Protocols

Privacy training empowers your team to handle AI tools responsibly, ensuring ethical and secure CRM usage.

Create guides, tutorials, and video resources tailored to your team’s specific needs. Use real-world scenarios to highlight best practices, like managing AI-generated listing descriptions to maintain accuracy and comply with MLS standards. Transparency is key - team members should know when and how to disclose AI usage to clients.

"An ethically grounded AI policy is the beacon that guides every team member towards principled innovation." – Stephen McClelland, Digital Strategist, ProfileTree

Incorporate fair housing compliance into training to help staff spot and address bias in AI-driven recommendations. Teach them how to validate AI outputs to ensure equitable service for all clients, regardless of demographics.
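As a training exercise, staff can run a simple outcome comparison on a sample of AI decisions. The sketch below computes each group's approval rate relative to the best-served group and flags ratios under 0.8. That threshold is the "four-fifths" rule of thumb borrowed from employment-selection guidance; it is used here only as a screening heuristic, is not the legal test under the Fair Housing Act, and the function names and sample data are made up for illustration.

```python
from collections import defaultdict


def selection_rates(decisions):
    """decisions: iterable of (group, approved) pairs; returns approval rate per group."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    return {g: approved[g] / totals[g] for g in totals}


def adverse_impact_ratios(decisions):
    """Ratio of each group's approval rate to the highest group's rate (1.0 means parity)."""
    rates = selection_rates(decisions)
    best = max(rates.values())
    if best == 0:
        return {g: 1.0 for g in rates}  # nobody approved: no group is favored
    return {g: rate / best for g, rate in rates.items()}


# Made-up sample: group A approved 8 of 10 times, group B approved 5 of 10 times.
sample = [("A", True)] * 8 + [("A", False)] * 2 + [("B", True)] * 5 + [("B", False)] * 5
ratios = adverse_impact_ratios(sample)
flagged = [g for g, ratio in ratios.items() if ratio < 0.8]
print(flagged)  # ['B']
```

A flagged group is a prompt for human review of the model and its inputs, not proof of discrimination. Small samples in particular can produce misleading ratios.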

Hands-on exercises can also help teams recognize potential threats, enforce data standards, and maintain CRM systems effectively. To keep up with AI advancements, offer ongoing education through self-paced online modules and encourage team collaboration in forums or learning communities. Regular feedback and assessments ensure everyone stays aligned with privacy protocols as they evolve.

Conclusion: Balancing AI Innovation and Privacy

Real estate professionals are navigating a complex but exciting landscape where AI innovation meets the critical need for data privacy. By leveraging AI thoughtfully and adhering to privacy standards, the industry can embrace new technologies while safeguarding client trust and meeting regulatory demands.

Key Takeaways for Real Estate Professionals

AI has become an integral part of real estate, with 75% of top U.S. brokerages already using it and 86% of employers anticipating its transformative impact by 2030. These technologies simplify tasks, speed up processes, and enhance client experiences. Tools like Ace AI demonstrate how tailored AI solutions can balance automation with privacy considerations.

To achieve this balance, real estate professionals should focus on:

- Strong data governance: Audit AI systems to limit unnecessary data collection and ensure compliance with privacy regulations.

- Clear consent and fairness practices: Obtain explicit permission for personal data use, and test AI outputs rigorously to catch bias or discrimination.

- Transparency: Be upfront about how data is handled and ensure clients understand these practices.

While automation can reduce compliance costs by up to 50%, ethical considerations must remain a priority. AI solutions should align with both performance objectives and the ethical standards expected by clients and regulators.

The Future of Privacy-First AI Solutions

The shift toward privacy-first AI is driven by both regulatory requirements and the demand for trustworthy technology. Frameworks like the NIST AI Risk Management Framework (AI RMF) provide a roadmap for adopting responsible AI practices, emphasizing governance, transparency, and accountability. Future developments will likely bring stricter rules around data disclosure and consent.

Leading companies, such as those utilizing Ace AI, are already setting the standard by implementing best practices. These include anonymizing data, limiting access to sensitive information, and maintaining open communication about data usage. Such measures not only ensure compliance but also build a competitive edge by earning client trust.

As AI becomes more integrated into real estate, firms that prioritize robust cybersecurity and continuously update their data protection strategies will be better equipped to counter emerging threats. Transparency will remain key to fostering trust among clients and employees alike. The most effective AI solutions will complement human expertise, offering valuable insights to support decision-making rather than replacing professional judgment. Ace AI's integration into Follow Up Boss showcases how innovation can align with security and compliance.

FAQs

How can real estate agents ensure their AI-powered CRM complies with privacy laws like GDPR and CCPA?

How Real Estate Agents Can Stay Compliant with Privacy Laws

Navigating privacy laws like GDPR and CCPA can feel overwhelming, but following a few key practices can make all the difference. Start by performing regular data audits. This means taking a close look at what personal information you’re collecting, how it’s being used, and where it’s stored. Knowing this is the first step in keeping your data practices above board.

Next, ensure your AI-powered CRM - such as tools like Ace AI - offers strong safeguards. Features like data encryption and secure access controls are must-haves to protect sensitive client information from breaches or unauthorized access.

Another critical step? Always obtain clear and explicit consent before collecting any client data. Alongside this, provide transparent privacy notices that clearly explain how their information will be used. This builds trust and keeps you aligned with legal requirements.

Finally, don’t underestimate the power of education. Regular staff training on privacy regulations and data protection can keep your team sharp and proactive. When everyone understands the importance of safeguarding client data, compliance becomes a team effort.

What are the key data privacy risks real estate teams should consider when using AI tools in their CRMs?

Data Privacy Risks in Real Estate AI Tools

When integrating AI tools into your CRM, it's crucial for real estate teams to be aware of potential data privacy risks. Here are the key concerns to keep in mind:

- Data Breaches: AI systems can become targets for cyberattacks, putting sensitive client and property details at risk.

- Misuse of Personal Data: Many AI tools require access to personal information, raising questions about how this data is collected, stored, and used.

- Compliance Challenges: Ensuring your AI tools align with data protection laws like GDPR or CCPA can be tricky, especially when handling personal data.

To mitigate these risks, choose AI solutions that focus on strong security protocols, clear data usage policies, and compliance with privacy regulations. For instance, Ace AI, specifically designed for the Follow Up Boss CRM, offers secure data management features, helping real estate teams protect sensitive information while streamlining their operations.

How does Ace AI ensure fairness and prevent bias in its AI-driven real estate tools?

Ace AI is dedicated to promoting fairness in its AI-powered real estate tools. It achieves this through strict data management protocols and continuous algorithm oversight, ensuring the models are trained on balanced and representative datasets. This approach helps prevent the reinforcement of historical biases that could result in unfair practices.

The company also emphasizes transparency, clearly outlining how decisions are made to build trust and accountability. By adhering to ethical AI principles and aligning with Fair Housing laws, Ace AI is focused on delivering solutions that are inclusive and fair for both real estate professionals and their clients.